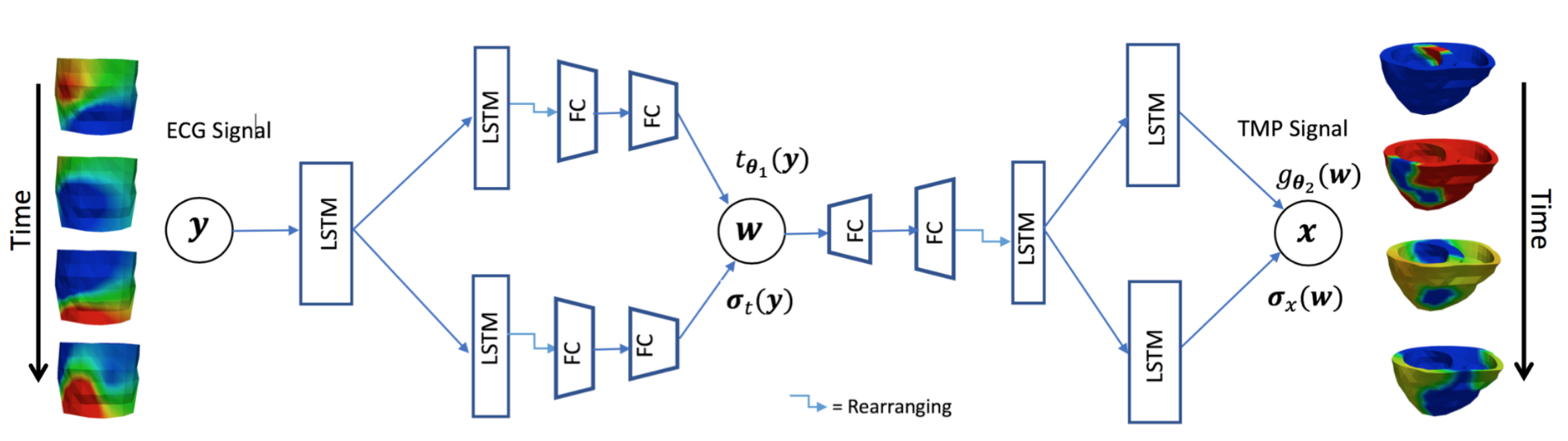

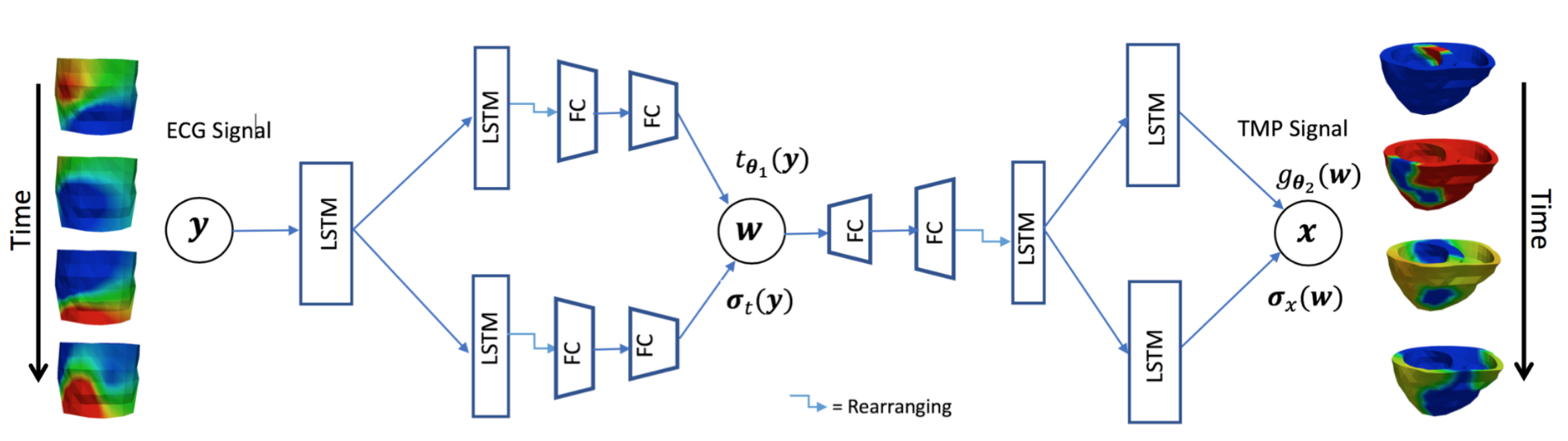

While many deep-learning-based approaches have been proposed for medical image analysis tasks such as image reconstruction and classification, the generalization ability of these deep networks remains poorly understood and little explored in the medical imaging community. In this paper, we propose two ways to improve the generalization of an encoder-decoder reconstruction network. First, drawing on analytical learning theory, we show theoretically that a stochastic latent space improves a network's ability to generalize to test data outside the training distribution. Second, based on the information bottleneck principle, we show that decreasing the mutual information between the latent space and the input data helps a network generalize to unseen input variations. We then present a sequence image reconstruction network with a stochastic latent space, optimized by a variational approximation of the information bottleneck principle. In the application of reconstructing the sequence of cardiac transmembrane potential from body-surface potential, we assess these two types of generalization ability in the presented network against its deterministic counterpart and demonstrate the efficacy of both strategies.
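As a rough illustration of the two ideas combined, the sketch below shows a generic variational information bottleneck (VIB) objective with a stochastic Gaussian latent space. It is not the authors' implementation: the diagonal Gaussian encoder, the standard normal prior, the squared-error reconstruction term, the weight `beta`, and the toy linear encoder/decoder weights (`W_mu`, `W_lv`, `W_dec`) are all assumptions chosen for illustration. The KL term to the prior is the standard variational upper bound on the mutual information between input and latent code, and sampling `z` through the reparameterization trick is what makes the latent space stochastic.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps (reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dims, averaged over batch."""
    kl = 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=1)
    return float(np.mean(kl))

def vib_loss(y_target, y_recon, mu, log_var, beta):
    """Assumed VIB-style objective: squared reconstruction error + beta * KL bound on I(X; Z)."""
    recon = float(np.mean(np.sum((y_target - y_recon) ** 2, axis=1)))
    return recon + beta * kl_to_standard_normal(mu, log_var)

# Toy usage with hypothetical linear encoder/decoder weights (illustration only).
rng = np.random.default_rng(0)
batch, in_dim, latent_dim, out_dim = 4, 8, 3, 5
x_in = rng.standard_normal((batch, in_dim))        # stands in for body-surface potential
y = rng.standard_normal((batch, out_dim))          # stands in for transmembrane potential
W_mu = 0.1 * rng.standard_normal((in_dim, latent_dim))
W_lv = 0.1 * rng.standard_normal((in_dim, latent_dim))
W_dec = 0.1 * rng.standard_normal((latent_dim, out_dim))

mu, log_var = x_in @ W_mu, x_in @ W_lv             # encoder outputs the latent distribution
z = reparameterize(mu, log_var, rng)               # stochastic latent sample
y_hat = z @ W_dec                                  # decoder reconstruction
loss = vib_loss(y, y_hat, mu, log_var, beta=1e-3)
```

Setting `beta` to zero and replacing the sampled `z` with `mu` recovers a deterministic encoder-decoder trained on reconstruction error alone, which is the kind of deterministic counterpart the abstract compares against; a larger `beta` compresses the latent code more strongly.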