Recently I came across this paper called Transformers are RNNs [1]. We all know that cannot be true: transformers were invented to overcome the limitations of RNNs and are immensely powerful. So, what could this paper be about? As it turns out, the authors show that the sequential, autoregressive way in which we run inference with RNNs is also possible with Transformers, given some simplifications. However, there is a more important theme in this paper: a linear approximation of the attention block, which lies at its heart. Without further ado, let’s understand these fundamentals one by one.

Linearization of Attention

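Let’s look at the attention layer. If $X \in \mathbb{R}^{N \times F}$ is the input, a sequence of $N$ vectors of dimension $F$, it is first transformed into query, key and value as follows:

$$Q = XW_Q, \qquad K = XW_K, \qquad V = XW_V$$

where $W_Q \in \mathbb{R}^{F \times D}$, $W_K \in \mathbb{R}^{F \times D}$ and $W_V \in \mathbb{R}^{F \times M}$ project the input from feature dimension $F$ to dimensions $D$, $D$ and $M$ respectively. Then the attention is computed with the following equation:

$$V' = \operatorname{softmax}\left(QK^\top\right) V$$

where the softmax is applied to each row. The usual $1/\sqrt{D}$ scaling inside the softmax is left out here because it can be folded into $W_Q$.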

Computational and Memory Complexity

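Both the computational and memory complexity of attention are quadratic in the sequence length $N$. To understand why, simply look at the two matrix multiplications in attention in the following figure:

Inside the softmax, we need to multiply the $N \times D$ matrix $Q$ with the $D \times N$ matrix $K^\top$, which takes $O(N^2 D)$ operations. After the softmax, we need to multiply the $N \times N$ attention matrix with the $N \times M$ matrix $V$, which takes $O(N^2 M)$ operations. Thus the computational complexity is $O(N^2 \max(D, M))$, which is $O(N^2)$ when $D$ and $M$ are small. Similarly, the memory complexity is $O(N^2)$ because we need to store the $N \times N$ matrix $QK^\top$ before the softmax.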
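For reference, here is a minimal NumPy sketch of standard softmax attention. It assumes NumPy, uses the hypothetical helper name `softmax_attention`, and assumes the $1/\sqrt{D}$ scaling has already been folded into $Q$. The point is to make the $N \times N$ intermediate explicit, because that is where the quadratic time and memory come from.

```python
import numpy as np

def softmax_attention(Q, K, V):
    """Quadratic attention softmax(Q K^T) V (hypothetical helper).

    Q, K: (N, D); V: (N, M). Assumes any 1/sqrt(D) scaling is already in Q.
    """
    scores = Q @ K.T                                       # (N, N): O(N^2 D) time, O(N^2) memory
    scores = scores - scores.max(axis=1, keepdims=True)    # softmax is shift-invariant per row; avoids overflow
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True) # row-wise normalization
    return weights @ V, weights                            # (N, M): O(N^2 M) time

rng = np.random.default_rng(0)
N, D, M = 6, 2, 3
Q, K, V = (rng.standard_normal((N, d)) for d in (D, D, M))
out, A = softmax_attention(Q, K, V)
print(out.shape, A.shape)  # (6, 3) (6, 6)
```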

Now, how can we improve this? The first thought that comes to mind is linearization. Suppose we could get rid of the softmax by passing the matrices $Q$ and $K$ through a function $\phi$, so that the softmax of $QK^\top$ is replaced by the plain matrix product $\phi(Q)\phi(K)^\top$. Suppose this function acts on the rows of $Q$ and $K$ (i.e. the columns of $K^\top$), mapping each $D$-dimensional row to a $C$-dimensional feature vector. This is represented in the figure below. Note that applying $\phi$ could increase the length of each row of $Q$ and of each column of $K^\top$; to hint at this, we have increased that length from 2 (in the previous figure) to 3 in this figure.

If we could linearize like this, we could save a lot. Because matrix multiplication is associative, we could first multiply the right two matrices, $\phi(K)^\top$ of size $C \times N$ and $V$ of size $N \times M$, as shown below:

This operation has computational complexity $O(NCM)$ and results in a matrix of size $C \times M$. Note that the complexity is $O(N)$ if $C$ and $M$ are small compared to $N$. In practice, $N$ is quite large, in the order of thousands, while $C$ and $M$ are small, in the order of 30. Hence, the asymptotic analysis makes sense. Similarly, in the next step, we multiply the first matrix $\phi(Q)$ of size $N \times C$ with this new matrix of size $C \times M$, which is again $O(NCM)$. Therefore, the overall computational complexity would be $O(N)$. The memory complexity would also reduce to $O(N)$, since no $N \times N$ matrix is ever formed.
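The saving comes entirely from the associativity of matrix multiplication. Here is a small NumPy check. It uses random positive matrices as stand-ins for $\phi(Q)$ and $\phi(K)$, and the sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
N, C, M = 1000, 3, 4            # illustrative sizes: long sequence, small feature dimensions
phiQ = rng.random((N, C))       # stand-ins for phi(Q) and phi(K); the identity holds for any matrices
phiK = rng.random((N, C))
V = rng.standard_normal((N, M))

# Left to right: forms the N x N matrix first -> O(N^2 C + N^2 M) time, O(N^2) memory
quadratic = (phiQ @ phiK.T) @ V

# Right to left: forms a C x M summary first -> O(N C M) time, no N x N matrix
summary = phiK.T @ V            # shape (C, M), independent of N
linear = phiQ @ summary         # shape (N, M)

print(summary.shape, np.allclose(quadratic, linear))  # (3, 4) True
```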

However, simply replacing the softmax of $QK^\top$ with a plain matrix product is not a great idea. For one, softmax involves row normalization, which is a critical component of attention; if we simply replace softmax with matrix multiplication, we lose that. Therefore, the authors of this paper [1] propose linearization followed by normalization, which is an excellent idea.

Linearization and Normalization

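This paper [1] proposes linearization followed by normalization. To understand that, let’s first rewrite attention by expanding the softmax:

$$V' = \frac{\exp\left(QK^\top\right)}{\operatorname{rowsum}\left(\exp\left(QK^\top\right)\right)}\, V$$

where $\exp$ is an element-wise exponential operation on the matrix $QK^\top$, and the division normalizes each row of the matrix $\exp(QK^\top)$ by its sum.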

Now, instead of the softmax, we can approximate the matrix $\exp(QK^\top)$ with $\phi(Q)\phi(K)^\top$ and retain the row-wise normalization. That way, as long as $\phi$ produces positive features, the attention matrix will always have positive entries with rows summing to 1, which preserves a key property of softmax attention. At the same time, this linearization keeps the reduced computational complexity.

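With this approximation, we can now rewrite the attention block equation as follows:

$$V' = \frac{\phi(Q)\,\phi(K)^\top V}{\phi(Q)\,\phi(K)^\top}$$

This is a slight abuse of notation. The division line here means that each row of the numerator $\phi(Q)\phi(K)^\top V$ is divided by the sum of the corresponding row of $\phi(Q)\phi(K)^\top$. To make things clearer and more accurate, it is perhaps better to look at the individual rows of $V'$, which look like this:

$$V'_i = \frac{\phi(Q_i)\,\phi(K)^\top V}{\phi(Q_i)\,\phi(K)^\top \mathbf{1}_N}$$

where $Q_i$ and $V'_i$ denote the $i$-th rows of $Q$ and $V'$ respectively, $\phi(Q_i)$ is a $1 \times C$ row vector, and $\mathbf{1}_N$ is the all-ones vector of length $N$. There is no ambiguity here because the denominator is a scalar: the sum of the row vector $\phi(Q_i)\phi(K)^\top$, which is obtained by multiplying a row vector with a matrix. This is perhaps clearer with the following diagram: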

This strategy does reduce the computational and memory complexity to $O(N)$. We can compute $\phi(K)^\top V$ (a $C \times M$ matrix) and $\phi(K)^\top \mathbf{1}_N$ (a $C$-dimensional vector) in advance. After that, each row $V'_i$ needs only a vector-matrix product costing $O(CM)$. The overall complexity therefore scales linearly with the number of rows of $Q$, which is $N$.
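Putting the pieces together, a minimal NumPy sketch of non-causal normalized linear attention might look like the following. It assumes the element-wise feature map $\phi(x) = \operatorname{elu}(x) + 1$ that [1] uses (so $C = D$), and the function names are hypothetical. The quadratic reference materializes $\phi(Q)\phi(K)^\top$ only to check the result.

```python
import numpy as np

def elu_plus_one(x):
    # phi(x) = elu(x) + 1 element-wise: x + 1 for x > 0, exp(x) for x <= 0 (always positive)
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_attention(Q, K, V, phi=elu_plus_one):
    """Row i: phi(Q_i) (phi(K)^T V) / (phi(Q_i) phi(K)^T 1_N); linear in N."""
    phiQ, phiK = phi(Q), phi(K)     # (N, C), here C = D
    KV = phiK.T @ V                 # (C, M), computed once
    K_sum = phiK.sum(axis=0)        # (C,), equals phi(K)^T 1_N, computed once
    numer = phiQ @ KV               # (N, M): O(C M) work per row
    denom = phiQ @ K_sum            # (N,): one scalar per row
    return numer / denom[:, None]

def quadratic_reference(Q, K, V, phi=elu_plus_one):
    # Same quantity, but materializing the N x N matrix phi(Q) phi(K)^T
    A = phi(Q) @ phi(K).T
    A = A / A.sum(axis=1, keepdims=True)   # rows sum to 1 because phi > 0
    return A @ V, A

rng = np.random.default_rng(1)
N, D, M = 8, 4, 3
Q, K, V = (rng.standard_normal((N, d)) for d in (D, D, M))
ref, A = quadratic_reference(Q, K, V)
out = linear_attention(Q, K, V)
print(np.allclose(out, ref), np.allclose(A.sum(axis=1), 1.0))  # True True
```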

Existence of Inner Product and Connection to Positive Definite Kernel

Okay, looks neat. But what about the approximation? We said that we can make the following approximation:

$$\exp\left(QK^\top\right) \approx \phi(Q)\,\phi(K)^\top$$

Can we do that?

The answer is yes, in some sense. This has a deep connection with the area called kernel methods and Reproducing Kernel Hilbert Spaces (RKHS). To understand this connection, let’s investigate what this matrix $\exp(QK^\top)$ is anyway.

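Let’s say

$$A = \exp\left(QK^\top\right)$$

The $(i, j)$ element of $A$ is $A_{ij} = \exp\left(Q_i K_j^\top\right)$, i.e. the exponential of the inner product between the $i$-th row of $Q$ and the $j$-th column of $K^\top$. There is a kernel that generates this matrix. It takes two vectors and gives a scalar as follows:

$$\kappa(x, y) = \exp\left(x^\top y\right), \quad x, y \in \mathbb{R}^D, \qquad A_{ij} = \kappa(Q_i, K_j)$$

The fact that this is a kernel entails many things (see [2]). One of them is that it is positive definite: the linear kernel $x^\top y$ is positive definite, and exponentiating a positive definite kernel preserves this property. Because it is a positive definite kernel, we can always express it as an inner product between two vectors in a Hilbert space $\mathcal{H}$ as follows: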

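$$\kappa(x, y) = \left\langle \phi(x), \phi(y) \right\rangle_{\mathcal{H}}$$

where $\phi$ is a mapping that transforms $x$ from Euclidean space to the appropriate Hilbert space $\mathcal{H}$, and we then take the inner product in that space. Because of this deep connection between kernels and inner products in Hilbert space, we can, in fact, write the matrix $A$ as a matrix product as follows:

$$A = \exp\left(QK^\top\right) = \phi(Q)\,\phi(K)^\top$$

where $\phi$ acts on each row of the matrices $Q$ and $K$. For this particular kernel, $\mathcal{H}$ is infinite-dimensional, so $\phi(Q)$ has infinitely many columns. The identity is exact, but we cannot compute it directly.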
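We can make the existence claim a bit more concrete. One standard way to write down a feature map for $\exp(x^\top y)$ is its Taylor series, which is not the approach used in [1]. The series gives $\exp(x^\top y) = \sum_{n \ge 0} (x^\top y)^n / n!$, and $(x^\top y)^n$ is the inner product of the $n$-fold tensor (Kronecker) powers of $x$ and $y$. The sketch below truncates this infinite-dimensional map at a finite order, and `taylor_features` and `feature_matrix` are hypothetical helpers. It also checks numerically that a Gram matrix of the exponential kernel has no negative eigenvalues, as positive definiteness requires.

```python
import math
import numpy as np

def taylor_features(x, order):
    """Truncated feature map for the kernel exp(x^T y) (hypothetical helper).

    Stacks x^{(n)} / sqrt(n!) for n = 0..order, where x^{(n)} is the n-fold
    Kronecker power of x, so <x^{(n)}, y^{(n)}> = (x^T y)^n.
    """
    feats, power = [], np.ones(1)          # x^{(0)} = [1]
    for n in range(order + 1):
        if n > 0:
            power = np.kron(power, x)      # next tensor power, length D**n
        feats.append(power / math.sqrt(math.factorial(n)))
    return np.concatenate(feats)

def feature_matrix(X, order):
    # Apply the feature map to each row of X
    return np.stack([taylor_features(row, order) for row in X])

rng = np.random.default_rng(2)
N, D = 5, 2
Q = 0.5 * rng.standard_normal((N, D))
K = 0.5 * rng.standard_normal((N, D))
exact = np.exp(Q @ K.T)

errors = []
for order in (1, 3, 8):
    approx = feature_matrix(Q, order) @ feature_matrix(K, order).T
    errors.append(np.abs(approx - exact).max())
print(errors)  # shrinks as the order grows

# Positive definiteness: a Gram matrix exp(X X^T) has no negative eigenvalues (up to round-off)
X = rng.standard_normal((6, D))
eigs = np.linalg.eigvalsh(np.exp(X @ X.T))
print(eigs.min() >= -1e-10 * eigs.max())  # True
```

The feature dimension of the truncated map grows like $D^{\text{order}}$. This is one reason why this exact construction is not used for attention in practice.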

At this point I want to caution that two things are different. One is saying that there exists an abstract Hilbert space where we can express a kernel as an inner product. The other is actually finding such a space or the map $\phi$. In this paper [1], the authors use $\phi$ defined element-wise as $\phi(x) = \operatorname{elu}(x) + 1$. This is not the Hilbert space mapping of the exponential kernel we were talking about earlier; it corresponds to a different kernel, $\phi(x)^\top \phi(y)$. So replacing $\exp(QK^\top)$ by a product of two matrices makes sense in principle. However, the mapping used in practice is chosen based on the empirical performance of the resulting linear-attention transformer.
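The difference from the map actually used in [1] can be seen numerically. This sketch assumes NumPy, and `elu_plus_one` is a hypothetical helper. It compares the row-normalized matrices obtained from $\exp(QK^\top)$ and from $\phi(Q)\phi(K)^\top$ with $\phi(x) = \operatorname{elu}(x) + 1$. Both are valid attention matrices, but they are not the same matrix.

```python
import numpy as np

def elu_plus_one(x):
    # phi(x) = elu(x) + 1 element-wise: x + 1 for x > 0, exp(x) for x <= 0
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

rng = np.random.default_rng(3)
N, D = 5, 4
Q = rng.standard_normal((N, D))
K = rng.standard_normal((N, D))

A_exp = np.exp(Q @ K.T)                       # the matrix softmax attention normalizes
A_lin = elu_plus_one(Q) @ elu_plus_one(K).T   # the matrix linear attention normalizes

# Both are entry-wise positive, so row normalization gives valid attention weights ...
print((A_exp > 0).all(), (A_lin > 0).all())   # True True

# ... but elu(x) + 1 is not a feature map of exp(q^T k), so the weights differ
P_exp = A_exp / A_exp.sum(axis=1, keepdims=True)
P_lin = A_lin / A_lin.sum(axis=1, keepdims=True)
print(np.abs(P_exp - P_lin).max())
```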

Nevertheless, I find it intriguing that there is this connection between attention and RKHS theory. This might lead to interesting ways to linearize the attention block in the future. I can already imagine a few of them right now.

But, why are Transformers RNNs?

When the authors say “Transformers are RNNs”, they are referring to sequential autoregressive inference as done in RNNs. In an RNN, we generate the output sequentially in an autoregressive fashion. Each input token goes into the network block, which updates a fixed-size hidden state and produces an output. It turns out that with the above linearization of attention, we can achieve something similar with Transformers too, but only if we consider causal attention.

Causal Attention Leads to Recurrence

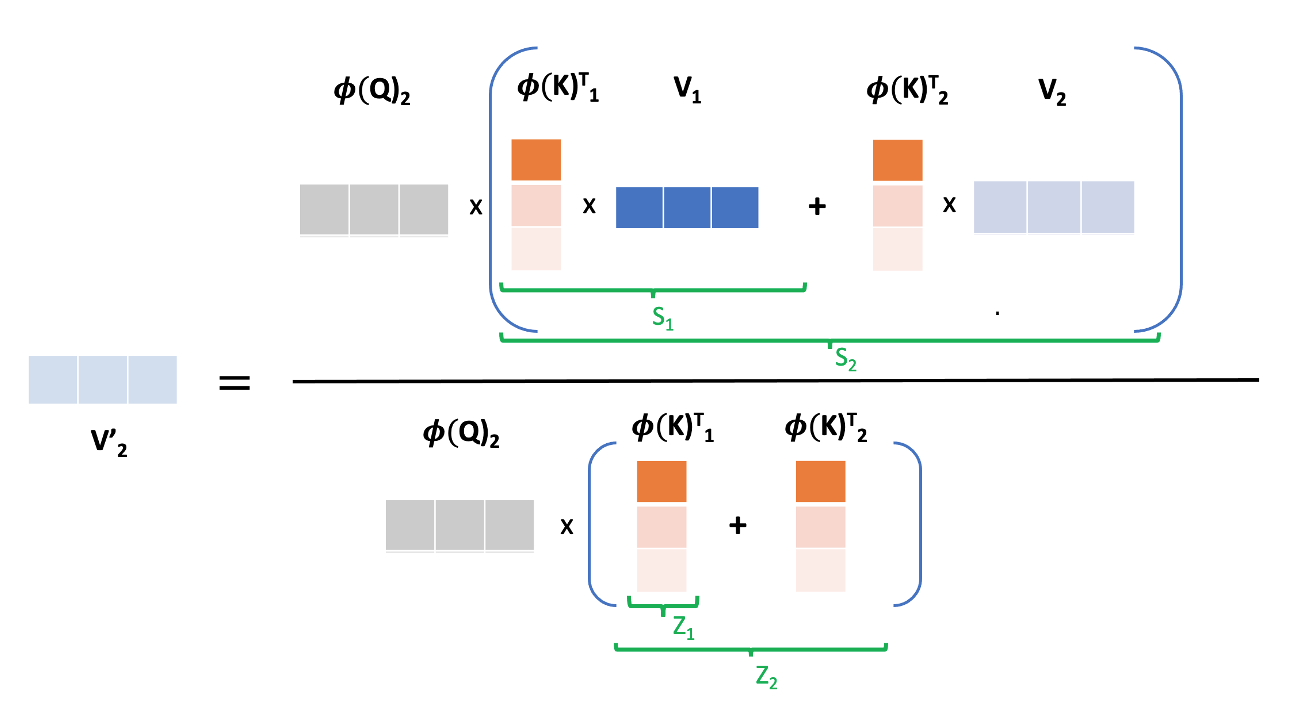

So far, we saw that linearization leads to efficiency in terms of memory and computation. Causal attention is attention that only looks at the previous tokens and nothing from the future. Combining it with linearization buys us even more.

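In causal attention, before we normalize, we mask the pre-attention matrix with a lower triangular matrix of ones $L$ (with $L_{ij} = 1$ for $j \le i$ and $0$ otherwise) as follows:

$$V' = \frac{\left(\phi(Q)\,\phi(K)^\top \odot L\right) V}{\phi(Q)\,\phi(K)^\top \odot L}$$

where $\odot$ represents pointwise multiplication. As before, the division means dividing each row by the row sum of the denominator. Because of this masking, we get a simpler, recurrent relation for computing $V'_i$. To understand that, let’s revisit the expression for $V'_i$:

$$V'_i = \frac{\phi(Q_i)\,\phi(K)^\top V}{\phi(Q_i)\,\phi(K)^\top \mathbf{1}_N}$$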

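We know that the matrix product $\phi(K)^\top V$ can be expanded as a sum of outer products, $\phi(K)^\top V = \sum_{j=1}^{N} \phi(K_j)^\top V_j$. Here $\phi(K_j)^\top$ is the $j$-th column of $\phi(K)^\top$ and $V_j$ is the $j$-th row of $V$. Because of the masking, we don’t need the terms with $j > i$ in this expansion. As a result, to compute $V'_i$ we only need the first $i$ terms of the outer product expansion:

$$V'_i = \frac{\phi(Q_i) \sum_{j=1}^{i} \phi(K_j)^\top V_j}{\phi(Q_i) \sum_{j=1}^{i} \phi(K_j)^\top}$$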

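From here, we can also introduce the recurrent relation by defining $S_i$ and $Z_i$ as follows:

$$S_i = \sum_{j=1}^{i} \phi(K_j)^\top V_j = S_{i-1} + \phi(K_i)^\top V_i, \qquad Z_i = \sum_{j=1}^{i} \phi(K_j)^\top = Z_{i-1} + \phi(K_i)^\top$$

$$V'_i = \frac{\phi(Q_i)\, S_i}{\phi(Q_i)\, Z_i}$$

with $S_0 = 0$ and $Z_0 = 0$.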

This has been clearly illustrated in the following diagram:
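The recurrence can also be checked numerically. Below is a minimal NumPy sketch that assumes the $\operatorname{elu}(x) + 1$ feature map from [1]; `causal_masked` and `causal_recurrent` are hypothetical names. The masked version builds the full $N \times N$ matrix. The recurrent version carries only the $C \times M$ state $S_i$ and the $C$-dimensional state $Z_i$ from one token to the next.

```python
import numpy as np

def elu_plus_one(x):
    # phi(x) = elu(x) + 1 element-wise (always positive)
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def causal_masked(Q, K, V, phi=elu_plus_one):
    # Quadratic reference: mask phi(Q) phi(K)^T with a lower-triangular matrix of ones
    N = Q.shape[0]
    A = (phi(Q) @ phi(K).T) * np.tril(np.ones((N, N)))
    return (A @ V) / A.sum(axis=1, keepdims=True)

def causal_recurrent(Q, K, V, phi=elu_plus_one):
    # Recurrent form: S_i = S_{i-1} + phi(K_i)^T V_i,  Z_i = Z_{i-1} + phi(K_i)^T
    phiQ, phiK = phi(Q), phi(K)
    C, M = phiK.shape[1], V.shape[1]
    S = np.zeros((C, M))          # running sum of outer products
    Z = np.zeros(C)               # running sum of phi(K_j)
    out = np.empty((Q.shape[0], M))
    for i in range(Q.shape[0]):
        S += np.outer(phiK[i], V[i])
        Z += phiK[i]
        out[i] = (phiQ[i] @ S) / (phiQ[i] @ Z)   # O(C M) work per token
    return out

rng = np.random.default_rng(4)
N, D, M = 7, 3, 2
Q, K, V = (rng.standard_normal((N, d)) for d in (D, D, M))
print(np.allclose(causal_masked(Q, K, V), causal_recurrent(Q, K, V)))  # True
```

The first output row is just $V_1$, since the first token can only attend to itself.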

Clear Path from Front to End

Apart from the attention block, every computation in the transformer architecture is applied to each token independently. In the previous sections, we saw that causal linear attention can compute its output one token at a time, without requiring any information about future tokens. This clears the computation path from the front to the end of the transformer, for the following reasons:

- The attention with previous tokens is handled by the recurrent relation for $S_i$ and $Z_i$ shown before. Besides that, there are skip connections, layer normalization and an MLP, all of which act on each token independently. Together, these make up a whole transformer layer.

- The second layer takes the output of the first layer and does the same computation. This layer has its own $S_i$ and $Z_i$. For the $i$-th token, we can calculate the output of this layer from the output of the previous layer (as its input) and the previously saved $S_{i-1}$ and $Z_{i-1}$. This means we are clear to compute the current output.

- In this way, as each new token comes in, we can recurrently calculate the output of each layer and pass it to the next layer. This continues until the last layer, where the corresponding output is delivered. Hence, we can do the overall Transformer decoding sequentially in a streaming fashion. The cost per token is constant, so the total computational complexity is linear in the number of tokens $N$. This is what makes inference similar to RNNs, hence the title.
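The streaming argument can be checked end to end on a toy model. The sketch below is a hypothetical, heavily simplified two-layer stack and not the architecture of [1]. It uses a single head, $\operatorname{elu}(x) + 1$ features, layer normalization without learned parameters, random weights, and post-norm residuals. The sequence is first run in batch with the masked quadratic form. It is then run token by token through both layers using only the saved $S$ and $Z$ states, and the two outputs are compared.

```python
import numpy as np

def elu_plus_one(x):
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def layer_norm(x, eps=1e-5):
    # Per-token normalization over the last axis (no learned scale/shift, for brevity)
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

class ToyLinearLayer:
    """Hypothetical layer: causal linear attention + MLP, each followed by residual + norm."""

    def __init__(self, d, rng):
        self.Wq, self.Wk, self.Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
        self.W1 = rng.standard_normal((d, 2 * d)) / np.sqrt(d)
        self.W2 = rng.standard_normal((2 * d, d)) / np.sqrt(2 * d)
        self.reset()

    def reset(self):
        d = self.Wq.shape[0]
        self.S = np.zeros((d, d))   # running sum of phi(K_j)^T V_j
        self.Z = np.zeros(d)        # running sum of phi(K_j)

    def mlp(self, h):
        return np.maximum(h @ self.W1, 0.0) @ self.W2

    def step(self, x):
        # One token in, one token out; only S and Z carry information across time
        q, k, v = elu_plus_one(x @ self.Wq), elu_plus_one(x @ self.Wk), x @ self.Wv
        self.S += np.outer(k, v)
        self.Z += k
        h = layer_norm(x + (q @ self.S) / (q @ self.Z))
        return layer_norm(h + self.mlp(h))

    def forward(self, X):
        # Whole-sequence reference using the masked (quadratic) form
        Q, K, V = elu_plus_one(X @ self.Wq), elu_plus_one(X @ self.Wk), X @ self.Wv
        A = (Q @ K.T) * np.tril(np.ones((len(X), len(X))))
        H = layer_norm(X + (A @ V) / A.sum(axis=1, keepdims=True))
        return layer_norm(H + self.mlp(H))

rng = np.random.default_rng(5)
d, N = 4, 6
layers = [ToyLinearLayer(d, rng) for _ in range(2)]
X = rng.standard_normal((N, d))

# Batch: each layer sees the whole sequence at once
Y_batch = X
for layer in layers:
    Y_batch = layer.forward(Y_batch)

# Streaming: feed tokens one at a time through every layer
for layer in layers:
    layer.reset()
stream = []
for x in X:
    for layer in layers:
        x = layer.step(x)
    stream.append(x)
Y_stream = np.stack(stream)
print(np.allclose(Y_batch, Y_stream))  # True
```

The streaming loop never revisits a past token. Across tokens, each layer keeps only a $d \times d$ matrix and a $d$-vector, which play the role of an RNN's fixed-size hidden state.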

References

- [1] Katharopoulos, A., Vyas, A., Pappas, N., & Fleuret, F. (2020, November). Transformers are RNNs: Fast autoregressive transformers with linear attention. In International Conference on Machine Learning (pp. 5156-5165). PMLR.

- [2] Gretton, A. https://www.gatsby.ucl.ac.uk/~gretton/coursefiles/lecture4_introToRKHS.pdf